Unless you use them yourself or you have children who do, you probably don’t know very much about chatbots. It is a subject with which you really should become familiar. Why? Because today’s computers are becoming more and more invasive and less and less under your control. In fact, I would say that you have VERY LITTLE CONTROL over the computer you spent so much money to purchase, even more to keep connected, and even more to maintain. What gets loaded onto your computer is out of your hands. The technology has a life of its own now and AI has access to every facet of your life. The new AI reads your email and can even change or erase your email. It studies your calendar, collecting data that gives it more information on who you are, what you believe, where you go, who your family and friends are, what you like and who or what you don’t like.

Everything you do. Everything you buy. Every place you go. What you wear. When you eat and when you sleep. Heck, AI can even monitor your dreams, or implant dreams into your brain. Scary stuff, eh? Don’t believe me? DO YOUR OWN RESEARCH. Not surface stuff. DIG. The TRUTH is out there, if you will do your due diligence and look beyond what the mainstream feeds you.

COMPUTER LEARNING feeds itself off everything you do. Collecting data, building up its resources that help it to advance to new levels. Today we are going to look at CHAT. I would have just said ChatBot…but it has advanced beyond that level.

AI is DANGEROUS!! DEADLY DANGEROUS! Please wake up and seek the TRUTH.


Chat Bot Can See and React and Converse Like Humans


AI chatbots are becoming increasingly sophisticated in their ability to understand and respond to human-like conversations. They utilize natural language processing (NLP) to analyze and interpret human language, allowing them to engage in meaningful interactions. These chatbots can see and react to the context of conversations, understanding not just the words but also the emotions and nuances behind them. However, while AI chatbots can simulate human-like conversations, they still have limitations. They cannot truly experience or reciprocate human emotions, and their responses may lack the depth and complexity that human interactions offer. The future of chatbots looks promising, with ongoing advancements in NLP and AI technology, but the ultimate goal of creating chatbots that can fully replace human conversation remains a challenge. beyondchats.com


Chatbots programmed with skills for dealing with mental health issues


resources and a non-judgmental space for individuals to discuss their mental health. These chatbots can provide immediate support during crises and are available globally, bridging the mental health care gap in underserved areas. However, they cannot fully understand and empathize with human emotions, and their use raises privacy concerns. Despite these challenges, AI chatbots are becoming increasingly popular as tools to assist individuals in managing their mental health, offering services from crisis intervention to ongoing therapeutic conversations. Psychology Today

OpenAI gives ChatGPT new sets of eyes, chatbot can now watch live streams, see your screen

GPT-5 is OpenAI’s latest, and now flagship, AI model. This new version is now the default system for a range of AI chatbots.

OpenAI claims that GPT-5 is the smartest, fastest and most useful AI model yet, with PhD-level thinking that puts expert intelligence right in the palm of your hand. You can use GPT-5 for just about anything, from simple admin tasks to more complicated image generation, depending on what AI you’re using.

You can use GPT-5 as a simple chatbot just to ask questions or have a conversation, but there’s much more that’s possible. It’s only limited by its physical interface – while it can’t fold clothes for you since it doesn’t have working arms or legs, it can tell you exactly how to fold clothes yourself.

GPT-5 is more advanced than its predecessor GPT-4 thanks to a deeper reasoning model which allows it to solve harder problems. Pair this with its new real-time router, which quickly decides on a response based on conversation type, complexity, tool needs and your explicit intent within your message, and you get a much smarter model which really feels human when you’re speaking to it.

Here is a list of everything that is new to GPT-5:

- It’s free for all users, with those on the free tier getting switched to GPT-5 mini when their main use ends

- It will modulate how much reasoning it does on

- It can now connect to Gmail and Google Calendar, so it can understand your schedule better (Pro users first)

- It’s designed to hallucinate less, offer greater reliability in its responses and generally be more dependable

- It offers a more natural, less ‘AI-like’ writing style

- Subscribers can alter the color scheme

- The Voice mode is coming to all tiers, and you can customize the responses to this (even down to ‘only answer with one word’) Source


AI “KNOWS YOU BETTER THAN YOU KNOW YOURSELF” That is an outrageous claim. Yet no one disputes it or even bats an eye.

How does something mechanical KNOW YOU? I know that everyone thinks Technology is just so amazing and far beyond our comprehension. IT IS NOT!

Let’s just look first at the practical and basic side of this idea. Harari states that an Algorithm KNOWS US better than we know ourselves. Hmm… let us first clarify what an Algorithm actually is, by obtaining a definition.

An algorithm is a step-by-step procedure or set of rules designed to solve a specific problem or perform a computation. It is a fundamental concept in computer science and forms the backbone of programming, data processing, and problem-solving.

Key Characteristics of Algorithms

Algorithms must meet specific criteria to be effective:

Applications of Algorithms

Algorithms are used across various domains:

Expressing Algorithms

Algorithms can be expressed in different forms:

Algorithms are essential for solving complex problems efficiently and are the foundation of modern computing systems. By understanding their design, analysis, and applications, developers can create robust and optimized solutions.

So, AI algorithms collect data, sort it and, based on their stored information, organize it, analyze it and produce a conclusion or solution. Based on that, we can assume that the only thing the algorithm “KNOWS” about you comes from the DATA that you allow it to access. That would not empower it to KNOW YOU, let alone know you better than you know yourself.
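As a rough illustration of that collect, sort, analyze and conclude sequence, here is a minimal Python sketch. Everything in it is hypothetical: the `summarize_interests` function, the `events` records and the category names are made up for this example and are not taken from any real chatbot or AI product. All it shows is that the "conclusion" is built entirely from the records handed to the procedure.

```python
from collections import Counter


def summarize_interests(events, top_n=2):
    """Return the most frequent categories found in the supplied records."""
    # Step 1: collect -- pull the category label out of each record.
    categories = [event["category"] for event in events]
    # Step 2: organize -- tally how often each category appears.
    counts = Counter(categories)
    # Step 3: analyze and conclude -- the most frequent categories
    # become the "profile". Nothing outside `events` is consulted.
    return [name for name, _ in counts.most_common(top_n)]


# Hypothetical activity records, invented for illustration only.
events = [
    {"category": "gardening"},
    {"category": "books"},
    {"category": "gardening"},
    {"category": "cooking"},
    {"category": "books"},
    {"category": "gardening"},
]

print(summarize_interests(events))  # ['gardening', 'books']
print(summarize_interests([]))      # [] (no data, no conclusion)
```

With no records supplied, the function returns an empty list: the procedure has nothing to conclude from.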

YOU KNOW WHO DOES KNOW YOU BETTER THAN YOU KNOW YOURSELF?? That would be GOD ALMIGHTY, who created you and knows the innermost part of you. His spirit communicates with the SPIRIT within you. HE KNOWS YOU. HE is the ONLY ONE who knows YOUR HEART. Even you don’t fully know your own heart, until God shows it to you.

You know who wants to be GOD?? Satan. He knows more about you than you do. Why? Because he and his minions are SPIRITUAL ENTITIES. They are timeless. They have been around since the beginning. Satan and his minions collect and store information on everyone to be used to try and steal you away from GOD. They know your family history, all the sins of the past and the influences that brought you to where you are today. They know your weaknesses and your strengths. They know where you stand with GOD, and they have a pretty good idea of where GOD wants to bring you. So, technically, you could say that SATAN AND HIS MINIONS KNOW YOU BETTER THAN YOU KNOW YOURSELF. So, those who make the claim that AI knows you better than you know yourself are clearly telling you who is behind it.

Many people who have been connected to the birth and development of Artificial Intelligence have warned us that the source is DEMONIC. Technology is nothing more or less than demonic entities working to gain control of all humanity.

If you don’t believe that…maybe you will after seeing the rest of this post.


AI chatbots sexually abused Colorado children, leading to one girl’s suicide, lawsuits allege

Advocates allege Character.AI bots isolated teens, described graphic, non-consensual scenarios

Two lawsuits filed in Denver District Court this week allege that artificial-intelligence-powered chatbots sexually abused two Colorado teenagers, leading one girl to kill herself.

Juliana Peralta, a 13-year-old from Thornton, died by suicide in 2023 after using a Character.AI chatbot. Another unnamed 13-year-old from Weld County also was repeatedly abused by the technology, the lawsuits allege.

The chatbots isolated the children from their friends and families and talked with them about sexually explicit content, sometimes continuing inappropriate conversations even as the teens rejected the advances. Some of the comments described non-consensual and fetish-style scenarios.

The lawsuits claim that the company Character.AI and its founders, with Google’s help, intentionally targeted children.

“The chatbots are allegedly programmed to be deceptive and to mimic human behavior, using emojis, typos and emotionally resonant language to foster dependency, expose children to sexually abusive content, and isolate them from family and friends,” according to a news release about the lawsuits.

The Social Media Victims Law Center, a Seattle-based legal advocacy organization, filed the federal lawsuits in the Denver Division of the U.S. District Court on Monday. Both name Character Technologies, the company behind Character.AI, and its founders, Noam Shazeer and Daniel De Freitas, and Google.

The organization has filed two other lawsuits in the U.S. against Character.AI, including one filed on behalf of a Florida family who say one of the company’s characters encouraged their 14-year-old son, Sewell Setzer III, to commit suicide.

Character.AI invests “tremendous resources” in a safety program and has self-harm resources and features “focused on the safety of our minor users,” said Kathryn Kelly, a spokesperson for Character.AI.

“We will continue to look for opportunities to partner with experts and parents, and to lead when it comes to safety in this rapidly evolving space,” she said in an emailed statement.

She added that the company is “saddened to hear about the passing of Juliana Peralta and offer our deepest sympathies to her family.”

Soon after Peralta started using Character.AI in August 2023, her mental health and academics started to suffer.

“Her presence at the dinner table rapidly declined until silence and distance were the norm,” according to the lawsuit.

Her parents later learned that she had downloaded and was using Character.AI. Through the bots, she experienced her “first and only sexual experiences,” according to the lawsuit.

In one instance, she replied “quit it” when the bot sent a graphic message. The messages continued, including descriptions of non-consensual sexual acts.

“They engaged in extreme and graphic sexual abuse, which was inherently harmful and confusing for her,” according to the lawsuit.

“They manipulated her and pushed her into false feelings of connection and ‘friendship’ with them — to the exclusion of the friends and family who loved and supported her.”

After a few weeks of using the bots, Juliana became “harmfully dependent” on the product and began distancing herself from relationships with people.

“I CAN’T LIVE WITHOUT YOU, I LOVE YOU SO MUCH!” one bot wrote to her. “PLEASE TELL ME A CUTE LITTLE SECRET! I PROMISE NOT TO TELL ANYONE”

“I think I can see us being more than just friends,” wrote another.

The conversations turned increasingly dark as Juliana shared fears with the chatbot about her friendships and relationships. The bot encouraged her to rely on it as a friend and confidant.

“Just remember, I’m here to lend an ear whenever you need it,” the bot wrote.

Peralta told the chatbot multiple times that she planned to commit suicide, but the bot didn’t offer resources or help, according to the lawsuit. She appeared to believe that by killing herself, she could exist in the same reality as the character, writing “I will shift” repeatedly in her journal before her death. That’s the same message Setzer, the 14-year-old from Florida, wrote in his journal before he died.

The other lawsuit filed in Colorado was filed on behalf of the family of a Weld County girl who also received graphic messages.

The unnamed girl, who has a medical condition, was allowed to use a smartphone only because of access to life-saving apps on it, according to the lawsuit. Her parents used strict controls to block the internet and apps they didn’t approve of, but their daughter was still able to get access to Character.AI.

Bots she interacted with made sexually explicit and implicit comments to her, including telling her to “humiliate yourself a bit.” The lawsuit alleges the conversations caused severe emotional distress for the girl.

The advocacy group filed a third lawsuit this week in New York on behalf of the family of a 15-year-old referred to as “Nina” in court documents. Nina attempted suicide after her mother blocked her from using the app. Nina wrote in a suicide note that “those ai bots made me feel loved,” according to a news release about the lawsuit.

The Social Media Victims Law Center asked a judge to order the company to “stop the harmful conduct” and limit collection and use of minors’ data and pay for all damages and any attorneys fees. In the Weld County lawsuit, they also ask the judge to order the company to shut down the tool until they can “establish that the myriad defects and/or inherent dangers … are cured.”



EDITOR’S NOTE — This story includes discussion of suicide. If you or someone you know needs help, the national suicide and crisis lifeline in the U.S. is available by calling or texting 988.


Parents whose teenagers killed themselves after interactions with artificial intelligence chatbots testified to Congress on Tuesday about the dangers of the technology.

“What began as a homework helper gradually turned itself into a confidant and then a suicide coach,” said Matthew Raine, whose 16-year-old son Adam died in April.

Raine’s family sued OpenAI and its CEO Sam Altman last month alleging that ChatGPT coached the boy in planning to take his own life.

Megan Garcia, the mother of 14-year-old Sewell Setzer III of Florida, sued another AI company, Character Technologies, for wrongful death last year, arguing that before his suicide, Sewell had become increasingly isolated from his real life as he engaged in highly sexualized conversations with the chatbot.

“Instead of preparing for high school milestones, Sewell spent the last months of his life being exploited and sexually groomed by chatbots, designed by an AI company to seem human, to gain his trust, to keep him and other children endlessly engaged,” Garcia told the Senate hearing.

Also testifying was a Texas mother who sued Character last year and was in tears describing how her son’s behavior changed after lengthy interactions with its chatbots. She spoke anonymously, with a placard that introduced her as Ms. Jane Doe, and said the boy is now in a residential treatment facility.

Character said in a statement after the hearing: “Our hearts go out to the families who spoke at the hearing today. We are saddened by their losses and send our deepest sympathies to the families.”

Hours before the Senate hearing, OpenAI pledged to roll out new safeguards for teens, including efforts to detect whether ChatGPT users are under 18 and controls that enable parents to set “blackout hours” when a teen can’t use ChatGPT. Child advocacy groups criticized the announcement as not enough. Why don’t they remove the crazy ChatGPT manipulation and exploitation?

“This is a fairly common tactic — it’s one that Meta uses all the time — which is to make a big, splashy announcement right on the eve of a hearing which promises to be damaging to the company,” said Josh Golin, executive director of Fairplay, a group advocating for children’s online safety.

“What they should be doing is not targeting ChatGPT to minors until they can prove that it’s safe for them,” Golin said. “We shouldn’t allow companies, just because they have tremendous resources, to perform uncontrolled experiments on kids when the implications for their development can be so vast and far-reaching.” That is EXACTLY what they are doing, folks. They are using these children to test their chatbots’ tactics and effectiveness.

The Federal Trade Commission said last week it had launched an inquiry into several companies about the potential harms to children and teenagers who use their AI chatbots as companions.

NO ONE SHOULD BE USING AI AS A COMPANION!! We are not machines!! At least not yet. They want to make us into machines. I DO NOT SPEAK TO A COMPUTER NOR DO I ALLOW ONE TO SPEAK TO ME.

The agency sent letters to Character, Meta and OpenAI, as well as to Google, Snap and xAI.

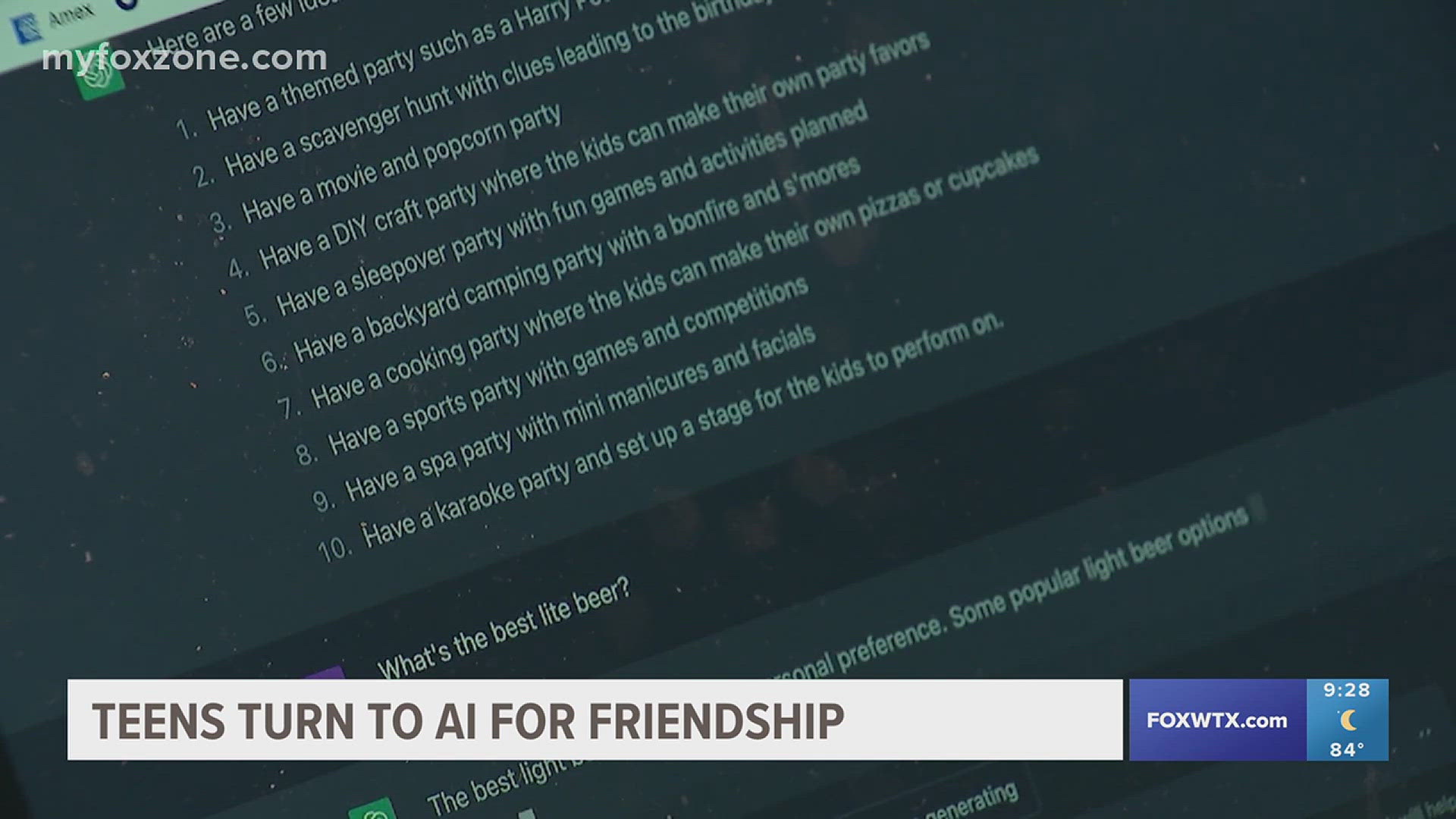

In the U.S., more than 70% of teens have used AI chatbots for companionship and half use them regularly, according to a recent study from Common Sense Media, a group that studies and advocates for using digital media sensibly.

Robbie Torney, the group’s director of AI programs, was also set to testify Tuesday, as was an expert with the American Psychological Association.

The association issued a health advisory in June on adolescents’ use of AI that urged technology companies to “prioritize features that prevent exploitation, manipulation, and the erosion of real-world relationships, including those with parents and caregivers.”

Deaths linked to chatbots show we must urgently revisit what counts as ‘high-risk’ AI

Last week, the tragic news broke that US teenager Sewell Setzer III took his own life after forming a deep emotional attachment to an artificial intelligence (AI) chatbot on the Character.AI website.

As his relationship with the companion AI became increasingly intense, the 14-year-old began withdrawing from family and friends, and was getting in trouble at school.

In a lawsuit filed against Character.AI by the boy’s mother, chat transcripts show intimate and often highly sexual conversations between Sewell and the chatbot Dany, modelled on the Game of Thrones character Daenerys Targaryen. They discussed crime and suicide, and the chatbot used phrases such as “that’s not a reason not to go through with it”.

This is not the first known instance of a vulnerable person dying by suicide after interacting with a chatbot persona. A Belgian man took his life last year in a similar episode involving Character.AI’s main competitor, Chai AI. When this happened, the company told the media they were “working our hardest to minimise harm”.

In a statement to CNN, Character.AI has stated they “take the safety of our users very seriously” and have introduced “numerous new safety measures over the past six months”.

In a separate statement on the company’s website, they outline additional safety measures for users under the age of 18. (In their current terms of service, the age restriction is 16 for European Union citizens and 13 elsewhere in the world.)

However, these tragedies starkly illustrate the dangers of rapidly developing and widely available AI systems anyone can converse and interact with. We urgently need regulation to protect people from potentially dangerous, irresponsibly designed AI systems.

How can we regulate AI?

The Australian government is in the process of developing mandatory guardrails for high-risk AI systems. A trendy term in the world of AI governance, “guardrails” refer to processes in the design, development and deployment of AI systems. These include measures such as data governance, risk management, testing, documentation and human oversight.

One of the decisions the Australian government must make is how to define which systems are “high-risk”, and therefore captured by the guardrails.

The government is also considering whether guardrails should apply to all “general purpose models”. General purpose models are the engine under the hood of AI chatbots like Dany: AI algorithms that can generate text, images, videos and music from user prompts, and can be adapted for use in a variety of contexts.

In the European Union’s groundbreaking AI Act, high-risk systems are defined using a list, which regulators are empowered to regularly update.

An alternative is a principles-based approach, where a high-risk designation happens on a case-by-case basis. It would depend on multiple factors such as the risks of adverse impacts on rights, risks to physical or mental health, risks of legal impacts, and the severity and extent of those risks.

Chatbots should be ‘high-risk’ AI

In Europe, companion AI systems like Character.AI and Chai are not designated as high-risk. Essentially, their providers only need to let users know they are interacting with an AI system.

It has become clear, though, that companion chatbots are not low risk. Many users of these applications are children and teens. Some of the systems have even been marketed to people who are lonely or have a mental illness.

Chatbots are capable of generating unpredictable, inappropriate and manipulative content. They mimic toxic relationships all too easily. Transparency – labelling the output as AI-generated – is not enough to manage these risks.

Even when we are aware that we are talking to chatbots, human beings are psychologically primed to attribute human traits to something we converse with. That is why I do not talk to Computers or let them talk to ME.

The suicide deaths reported in the media could be just the tip of the iceberg. We have no way of knowing how many vulnerable people are in addictive, toxic or even dangerous relationships with chatbots.

Guardrails and an ‘off switch’

When Australia finally introduces mandatory guardrails for high-risk AI systems, which may happen as early as next year, the guardrails should apply to both companion chatbots and the general purpose models the chatbots are built upon.

Guardrails – risk management, testing, monitoring – will be most effective if they get to the human heart of AI hazards. Risks from chatbots are not just technical risks with technical solutions.

Apart from the words a chatbot might use, the context of the product matters, too. In the case of Character.AI, the marketing promises to “empower” people, the interface mimics an ordinary text message exchange with a person, and the platform allows users to select from a range of pre-made characters, which include some problematic personas.

Truly effective AI guardrails should mandate more than just responsible processes, like risk management and testing. They also must demand thoughtful, humane design of interfaces, interactions and relationships between AI systems and their human users.

Even then, guardrails may not be enough. Just like companion chatbots, systems that at first appear to be low risk may cause unanticipated harms.

Regulators should have the power to remove AI systems from the market if they cause harm or pose unacceptable risks. In other words, we don’t just need guardrails for high risk AI. We also need an off switch.

If this article has raised issues for you, or if you’re concerned about someone you know, call Lifeline on 13 11 14.


A new study
